The artificial intelligence chip sector has undergone a remarkable transformation since the early 2010s, evolving from niche accelerators for research labs into core infrastructure for the global AI build-out. What began with general-purpose GPUs repurposed for machine learning has matured into a multi-billion-dollar ecosystem dominated by custom silicon, where breakthroughs in process technology and architecture now dictate the pace of innovation.

This foundation sets the stage for 2026, when the market is forecast to exceed $100 billion in revenue—a critical inflection point driven by explosive demand for generative AI accelerators in data centers and cloud infrastructure. Leading foundries and integrated device manufacturers are accelerating production of 2nm-class processes, chiplet architectures, and high-bandwidth memory to satisfy hyperscalers’ insatiable needs, with memory and logic segments poised for double-digit growth rates.

Explosive Market Growth and AI Demand

This surge reflects the broader maturation of AI hardware, where custom silicon has evolved from experimental prototypes to mission-critical infrastructure. Analysts forecast that the AI chipset sector will sustain compound annual growth rates of 25% to 30% through the early 2030s, with hyperscale cloud providers capturing the lion’s share of incremental investment. The transition underscores a fundamental shift: AI is no longer a software overlay but a hardware imperative, demanding chips optimized for trillion-parameter models, real-time inference, and energy-efficient scaling.
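To make the compounding concrete, the sketch below projects a market size forward at the 25% and 30% growth rates quoted above. The $100 billion starting value and the 2026 base year are borrowed from the headline forecast purely for illustration; applying the growth range to that base is an assumption, not an analyst figure, and `project_revenue` is a hypothetical helper.

```python
def project_revenue(base_billions, cagr, years):
    """Compound a starting value forward: base * (1 + cagr) ** years."""
    return base_billions * (1.0 + cagr) ** years


# Assumption for illustration only: a $100B market in base year 2026.
BASE_YEAR = 2026
BASE_BILLIONS = 100.0

for cagr in (0.25, 0.30):
    for year in (2028, 2030, 2032):
        value = project_revenue(BASE_BILLIONS, cagr, year - BASE_YEAR)
        print(f"CAGR {cagr:.0%}, {year}: ~${value:,.0f}B")
```

Under these assumptions, the gap between the 25% and 30% paths widens every year, which is why small changes in the assumed growth rate move long-range forecasts so much.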

High-Bandwidth Memory Powers the Surge

At the core of this expansion lies high-bandwidth memory (HBM), which delivers 5 to 10 times the data throughput of traditional DDR5 solutions while maintaining competitive power profiles. This capability is indispensable for training large language models, where memory bottlenecks previously constrained progress, and has triggered a surge in DRAM capital expenditures across the supply chain. HBM3e variants, in particular, are becoming standard in next-generation accelerators, enabling terabytes-per-second bandwidth that supports the parallel processing demands of modern AI workloads.
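The terabytes-per-second figure follows from simple interface arithmetic: theoretical peak bandwidth is bus width times per-pin data rate, summed over memory stacks. The sketch below assumes a 1024-bit interface per HBM stack, a per-pin rate of 9.6 Gb/s, and eight stacks per accelerator. These are illustrative values rather than the specifications of any particular product, and `peak_bandwidth_gb_per_s` is a hypothetical helper.

```python
def peak_bandwidth_gb_per_s(bus_width_bits, pin_rate_gbit_per_s, stacks=1):
    """Theoretical peak bandwidth in GB/s: width (bits) * rate (Gb/s per pin) / 8, times stacks."""
    return stacks * bus_width_bits * pin_rate_gbit_per_s / 8.0


# Illustrative assumptions, not product specifications.
HBM_BUS_WIDTH_BITS = 1024    # assumed interface width per stack
HBM_PIN_RATE_GBPS = 9.6      # assumed per-pin data rate
STACKS_PER_ACCELERATOR = 8   # assumed number of stacks on one accelerator

per_stack = peak_bandwidth_gb_per_s(HBM_BUS_WIDTH_BITS, HBM_PIN_RATE_GBPS)
total = peak_bandwidth_gb_per_s(
    HBM_BUS_WIDTH_BITS, HBM_PIN_RATE_GBPS, STACKS_PER_ACCELERATOR
)
print(f"per stack: {per_stack / 1000:.2f} TB/s, accelerator: {total / 1000:.2f} TB/s")
```

Sustained bandwidth in a real system will be lower than this theoretical peak.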

Chiplets Redefine Accelerator Design

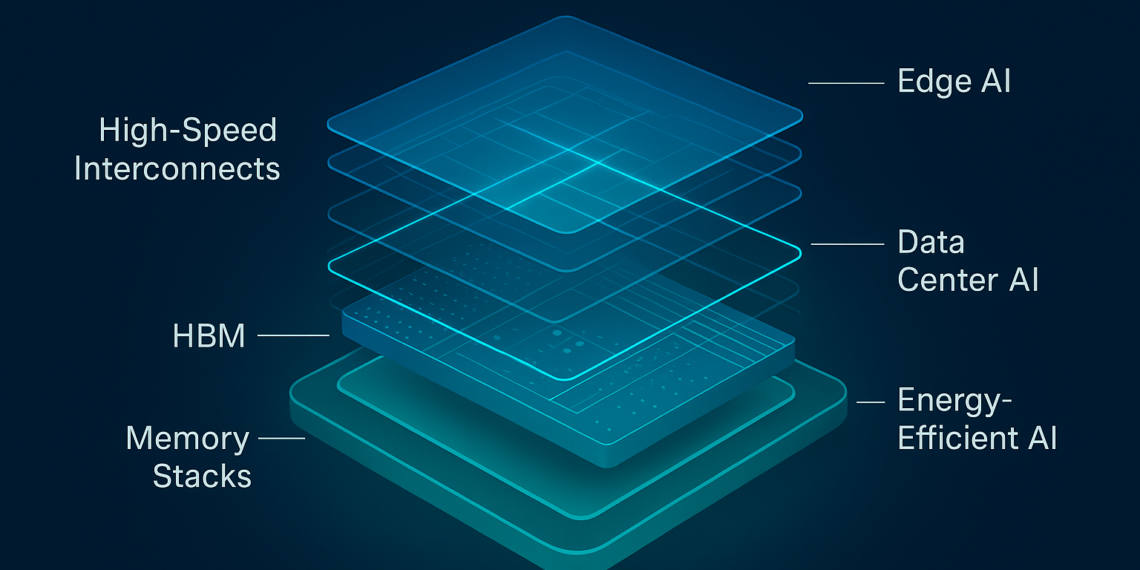

Chiplet designs represent another cornerstone, replacing monolithic dies with modular assemblies that integrate compute cores, memory stacks, and high-speed interconnects. Advanced packaging technologies, such as TSMC’s CoWoS and SoIC and Intel’s EMIB, facilitate 2.5D and 3D integration, allowing manufacturers to achieve higher transistor counts per package and better scalability without the yield risks of a single large die on a cutting-edge node. This approach not only accelerates time-to-market for AI training and inference hardware but also enables customization for diverse applications, from hyperscale servers to edge deployments.
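The yield argument can be illustrated with the simple Poisson yield model, Y = exp(-A * D0), where A is die area and D0 is defect density. The sketch below compares one 800 mm² monolithic die with four 200 mm² chiplets. The defect density, the die sizes, and the assumption that only known-good chiplets are packaged are all illustrative, and packaging yield and die-to-die interface overhead are ignored.

```python
import math


def poisson_yield(die_area_mm2, defects_per_cm2):
    """Fraction of defect-free dies under the Poisson model Y = exp(-A * D0)."""
    area_cm2 = die_area_mm2 / 100.0  # 100 mm^2 per cm^2
    return math.exp(-area_cm2 * defects_per_cm2)


def silicon_per_good_product(die_area_mm2, dies_per_product, defects_per_cm2):
    """Average wafer area (mm^2) consumed per working product, assuming
    each die is tested and only known-good dies are assembled."""
    return dies_per_product * die_area_mm2 / poisson_yield(die_area_mm2, defects_per_cm2)


# Illustrative assumptions, not foundry data.
D0 = 0.1  # defects per cm^2

monolithic = silicon_per_good_product(800.0, 1, D0)
chiplets = silicon_per_good_product(200.0, 4, D0)

print(f"monolithic yield: {poisson_yield(800.0, D0):.1%}")
print(f"chiplet yield:    {poisson_yield(200.0, D0):.1%}")
print(f"silicon per good product: {monolithic:.0f} mm^2 vs {chiplets:.0f} mm^2")
```

Because yield falls exponentially with area in this model, splitting the same total silicon into smaller dies reduces the area wasted on defective parts, which is the effect the packaging technologies above are meant to exploit.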

Foundry Roadmaps Target 2nm Milestone

Foundries are synchronizing their roadmaps to capitalize on this window, with gate-all-around (GAA) transistors at the 2nm process node promising 15% to 30% improvements in performance and power efficiency compared to incumbent 3nm technologies. TSMC plans to ramp its enhanced N2P node into volume production for external customers by mid-2026, targeting AI processors and high-performance computing silicon. Samsung, having initiated mass production of its 2nm GAA platform earlier this year, is advancing SF2P and SF2P+ variants to compete directly, while Intel’s 18A process enters risk production with external foundry services on the horizon.

Data Centers and Edge AI Applications

Data centers will dominate the revenue picture, potentially accounting for half of all processor spending by the middle of the decade as enterprises prioritize scalable AI infrastructure. Custom ASICs and systems-on-chip (SoCs) are also proliferating at the edge, powering applications in automotive advanced driver-assistance systems, industrial automation, and consumer devices like smartphones and wearables. These edge solutions use more mature process nodes for cost efficiency while delivering on-device inference capabilities that reduce latency and cloud dependency.

Risks and Long-Term Outlook

Despite the optimism, industry leaders navigate significant headwinds. Geopolitical tensions, including U.S. export controls on advanced semiconductors and ongoing U.S.-China trade frictions, complicate global supply chains and investment decisions. Potential overcapacity in leading-edge fabs looms as a risk if AI hype moderates, though sustained demand from generative AI applications appears likely to absorb expansions. Startup funding in the sector has already surpassed $7.6 billion, signaling robust investor confidence amid rapid technological iteration.

Looking further ahead, forecasts indicate data center AI chips alone could surpass $400 billion by 2030, cementing semiconductors as the foundational enabler of the AI economy. For electronics manufacturers, investors, and policymakers, 2026 represents not just a revenue milestone but a strategic crossroads where technological leadership will determine competitive positioning in an AI-dominant future.